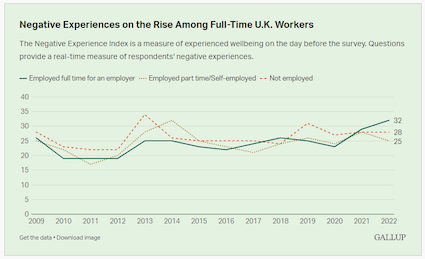

Last year, the UK came out as having one of the most emotionally distressed workforces in the world.

Full-time UK employees scored 32 on Gallup’s Negative Experience Index. This is up substantially from 23 in 2020. Full-time employees in the UK are now some of the most emotionally distressed in Europe, second only to employees in Malta (39).

These stats from the 2023 Work Trend Index: Annual Report add further detail:

- 3x more meetings/calls each week since February 2020

- 64% say they don’t have enough time/energy to do the job

- 60% of leaders say lack of innovation is a concern

Clearly we need a solution… enter AI

The demand for artificial support is strong: in the same index, 70% say they would delegate as much work as possible to AI to lessen their workloads. But 49% of people say they’re worried AI will take their jobs.

It’s an interesting juxtaposition.

Clever use of automation can give us back time to do more of the stuff that we love, are great at and where we offer most value.

It seems a no-brainer.

This gift of extra time might also help address the statistic around a lack of innovation, without the need to deploy more generative AI.

However, I advocate a more mindful, selective use of it.

The human impact

When making a decision about whether or not to use AI, we need to take into account not just whether it is a productive move, but also consider the human impact.

After all, why are we looking to be productive in the first place? Businesses are people, for people. So, should we be looking at the potential people cost? And what impact could that be having on our deeper engagement levels?

When more of the essential human elements of our work are delegated to AI, are we beginning to delegate our relevance? The less relevant that people feel, the less connected and engaged they feel. And are we missing something essential in blind service to more, more quickly?

Let’s take the example of producing content

Maybe a blog or a vlog on a topic that you’re passionate about, sharing a clear point of view. A bit like this.

Trust is such an essential currency in business, and a key element to trust is intimacy.

A significant part of the impact of that work is not just what it says, but the intimacy created through the knowledge that this person has spent time to think about what they would like to write, and then the endeavour to articulate it as best as they can in their own, unique, imperfect way.

You are reading me.

This impact is lost when I know or suspect that the content idea has been spat up and then written by AI, with the ‘writer’s’ only contribution being that they’ve maybe edited it a bit.

If the intention is the pure sharing of information, then perhaps this works. If it’s sharing a point of view or feeling - if it’s positioned as a personal piece, then it feels more problematic.

You end up with a saturated theatre of lots and lots of meaningless stuff.

Content for content’s sake.

What’s the point? Where’s the intimacy?

Operating in spaces like this, either as the ‘faux writer’ or as the reader, is bound to lead to greater disconnection.

Disconnection and lack of trust are already issues in financial services; we don’t need to add to them.

The same danger can be flagged for other channels of communication - an email, a slide deck, a speech, a presentation, a bit of training.

We run the risk of removing the very human nuance and imperfection from our communication and connections, leaving us all feeling a little alienated.

I’m not even challenging the ability of generative AI to produce great content, communicate really well and even get better at imitating our imperfections. It’s only going to get better and better.

But at what cost? Just because it can doesn’t make it right - or good for us.

And if we’re programming AI to imitate our unique style and imperfections, why don’t we just embrace them ourselves, at source, and get comfortable putting that imperfection out there?

“I don’t have time. That’s why.”

It seems that we might have made an artificially generated rod for our own backs: because everyone’s using AI to get content out there, the sheer volume of content demanded is simply too time-consuming for a human to produce.

But if more people committed to communicating entirely authentically across channels, the demand for more, more quickly would subside, purely because people can’t do it. A greater emphasis would be placed back on quality, along with a reconnection to why we’re communicating in the first place.

So, some conclusions:

This is not intended as an anti-AI piece.

There are opportunities that we must embrace. Why wouldn’t we?

However, we cannot neglect the human impact when making decisions about AI moving forward - both individually and organisationally.

That’s why we need a very intentional, human-centric approach that honours the values of your organisation and serves long-term, sustainable high performance.

If we continue to delegate what’s essentially human and brilliant about ourselves and our teams to AI, all in service to a narrow definition of productivity (more, more quickly), we also run the risk of delegating our essential meaning and purpose. Once we lose this, we lose everything.

My commitment:

For what it’s worth: I promise that any piece of content that I share that says, ‘by Chris Wickenden’, will be just that. I will have thought deeply about it, have taken time to articulate it in my own, imperfect words, and will be sharing because I care and believe in it.

Not because I’m special in any way, but because I value authenticity too highly and I think that without it, business - life - loses meaning.